Abstract

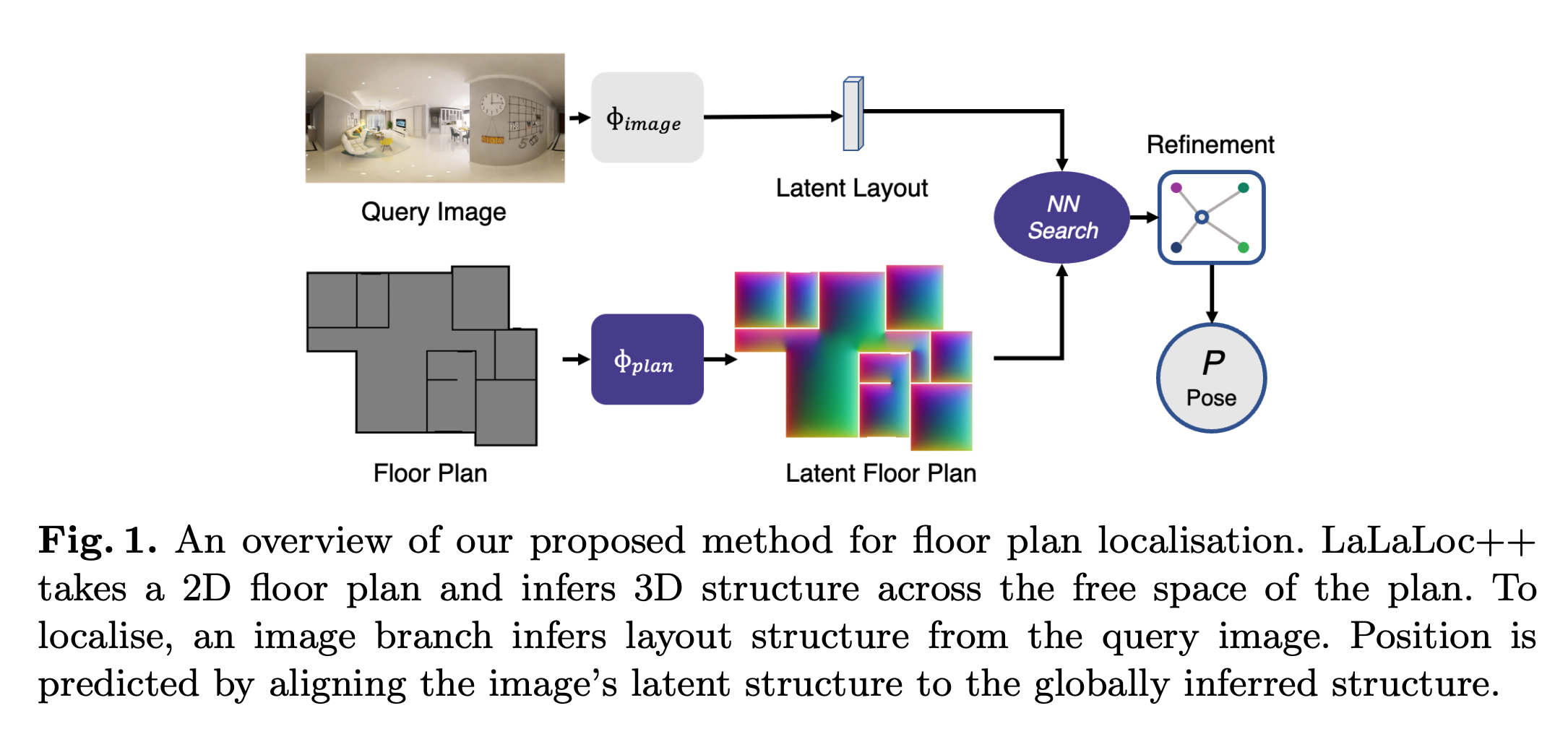

We present LaLaLoc++, a method for floor plan localisation in unvisited environments through latent representations of room layout. We perform localisation by aligning room layout inferred from a panorama image with the floor plan of a scene. To process a floor plan prior, previous methods required that the plan first be used to construct an explicit 3D representation of the scene. This process requires assumptions about the scene geometry and can make expensive steps, such as rendering, necessary. LaLaLoc++ instead introduces a global floor plan comprehension module that efficiently infers structure densely and directly from the 2D plan, removing any need for explicit modelling or rendering. On the Structured3D dataset, this module alone improves localisation accuracy by more than 31% while increasing throughput by an order of magnitude. Combined with a transformer-based panorama embedding module, LaLaLoc++ improves accuracy over previous methods by more than 37% with dramatically faster inference.
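Localisation by alignment in a latent layout space can be pictured as a nearest-neighbour search. The following is a minimal, hypothetical Python sketch, not the LaLaLoc++ implementation. It assumes the floor plan module has already produced one layout embedding per cell of a regular grid over the plan (`plan_embeddings`) and that the panorama has been embedded into the same space. It then returns the centre of the cell whose embedding is closest. The Euclidean metric, the grid layout and all names (`localise`, `l2_distance`, `cell_size`) are illustrative assumptions; the abstract does not specify them.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical type: a layout embedding is a fixed-length vector of floats.
Embedding = Sequence[float]


def l2_distance(a: Embedding, b: Embedding) -> float:
    """Euclidean distance between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def localise(
    pano_embedding: Embedding,
    plan_embeddings: List[List[Embedding]],
    cell_size: float = 1.0,
) -> Tuple[Tuple[float, float], float]:
    """Return the (x, y) centre of the grid cell whose plan embedding is
    closest to the panorama embedding, together with that distance.

    plan_embeddings[row][col] is an assumed dense map of layout embeddings
    inferred from the 2D floor plan, one per grid cell of side cell_size.
    """
    best_pos = None
    best_dist = math.inf
    for row, cells in enumerate(plan_embeddings):
        for col, emb in enumerate(cells):
            d = l2_distance(pano_embedding, emb)
            if d < best_dist:  # strict '<' keeps the first cell on ties
                best_dist = d
                # Cell centre in plan coordinates: x along columns, y along rows.
                best_pos = ((col + 0.5) * cell_size, (row + 0.5) * cell_size)
    if best_pos is None:
        raise ValueError("plan_embeddings is empty")
    return best_pos, best_dist


# Usage: a 2x2 toy grid of 2D embeddings with 0.5 m cells.
plan = [
    [[0.0, 0.0], [1.0, 0.0]],
    [[0.0, 1.0], [0.9, 0.9]],
]
pos, dist = localise([1.0, 1.0], plan, cell_size=0.5)
print(pos, dist)
```

The exhaustive grid search is chosen here for clarity; it yields only a coarse, cell-level position estimate.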

Code

The code for training and evaluating LaLaLoc++ can be found here.

Citation

BibTeX:

@inproceedings{howard2022lalaloc++,
  title={LaLaLoc++: Global Floor Plan Comprehension for Layout Localisation in Unvisited Environments},
  author={Howard-Jenkins, Henry and Prisacariu, Victor Adrian},
  booktitle={Proceedings of the European Conference on Computer Vision},
  pages={},
  year={2022}
}